Continuing with our discussion of Kernel Boot: start_kernel() runs as the implicit Process 0 (its PID was initialized to zero, so let's not go looking for a Process 0 with “ps”), AKA the “root thread”, the granddaddy of all processes to come. And the fall-back guy, as we will see.

Process 0 (PID 0, root thread) spawns off Process 1, the kernel_init kernel thread, which will eventually exec /sbin/init in the thread we just created (created, not scheduled to run... not just yet).

Then kthreadd, the kernel thread whose job is to start off other kernel threads, is created, AKA Process 2.
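The creation order above can be sketched in plain user-space C. This is a toy model, not kernel code: `toy_table`, `toy_boot`, `toy_kernel_thread` and `toy_rest_init` are all made-up names, and the only thing the sketch assumes is that PIDs are handed out sequentially, starting after the root thread's 0.

```c
#include <assert.h>

/* Toy user-space model, NOT kernel code: every name here is hypothetical. */
#define TOY_MAX_TASKS 8

struct toy_task {
    int pid;
    const char *name;
};

struct toy_table {
    struct toy_task tasks[TOY_MAX_TASKS];
    int count;     /* tasks created so far */
    int next_pid;  /* next PID to hand out */
};

/* The root thread is never forked: it simply exists, with PID 0. */
void toy_boot(struct toy_table *t)
{
    t->count = 0;
    t->next_pid = 1;
    t->tasks[t->count].pid = 0;
    t->tasks[t->count].name = "root thread";
    t->count++;
}

/* Stand-in for thread creation: the task is created, not run. */
int toy_kernel_thread(struct toy_table *t, const char *name)
{
    int pid;

    assert(t->count < TOY_MAX_TASKS);
    pid = t->next_pid++;
    t->tasks[t->count].pid = pid;
    t->tasks[t->count].name = name;
    t->count++;
    return pid;
}

/* Creation order matters: init first so that it gets PID 1, then kthreadd. */
void toy_rest_init(struct toy_table *t, int *init_pid, int *kthreadd_pid)
{
    *init_pid = toy_kernel_thread(t, "kernel_init");
    *kthreadd_pid = toy_kernel_thread(t, "kthreadd");
}
```

Calling `toy_boot()` and then `toy_rest_init()` on a fresh table leaves three entries: the root thread with PID 0, kernel_init with PID 1 and kthreadd with PID 2. Swap the two calls inside `toy_rest_init()` and kthreadd would walk off with PID 1, which is the whole reason the order is what it is.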

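Process 0 (PID 0), not Process 1, is the one that gets set up as the idle thread for the boot CPU, which is the role it falls back to once it has called schedule() at least once.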

Regardless, we then schedule() (we DID create a process or two, hopefully). Nota bene: at the point where the kernel thread destined to become /sbin/init was created, we had only Processes 0 and 1 in the run queue, i.e. Process 1 becometh /sbin/init. This holds per 2.6.32.2 at least, and has for a while now.

As part of schedule(), processes may have pooped out or popped in, so we will probably find something to run, right? After all we have just gone through, we have a right to expect something to run. Grins.

However, in the unlikely event that schedule() (eventually) finds nothing to switch in, we cpu_idle() for as long as it takes to find a process to run. Oh … this time, under the aegis of Process 0 which, by our own definition, is the last man standing. And which, as we are well aware, cannot be “ps”-ed.
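The "last man standing" idea fits in a few lines of user-space C. Again, a toy sketch and not the kernel's scheduler: `rq_entry` and `toy_pick_next` are hypothetical names, and the policy (first runnable non-idle task wins) is an assumption made purely to keep the example short.

```c
#include <stddef.h>

/* Toy run-queue entry, NOT a kernel structure: names are hypothetical. */
struct rq_entry {
    int pid;
    int runnable;  /* non-zero if the task wants the CPU */
};

/*
 * Return the PID of the task to run next.
 * PID 0 (the idle/root thread) is never picked while anything else is
 * runnable; it is only the fall-back when the loop finds nothing.
 */
int toy_pick_next(const struct rq_entry *rq, size_t n)
{
    size_t i;

    for (i = 0; i < n; i++) {
        if (rq[i].pid != 0 && rq[i].runnable)
            return rq[i].pid;
    }
    return 0;  /* nothing else to run: Process 0 idles */
}
```

With entries for PIDs 0, 1 and 2 where only PID 1 is runnable, `toy_pick_next()` returns 1. Mark PID 1 as not runnable as well and it returns 0, which is our Process 0 sitting in its idle loop until somebody shows up.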

Please do note, a system-specific idle could have been created by /sbin/init, with its PID being whatever we get, since the init thread and the children it created could, in principle, have fork-ed till kingdom come.

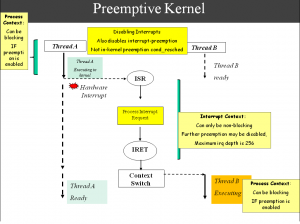

We did mention that we needed to, and actually did, enable/disable preemption along the way, depending on whether we were ready to schedule() or not. Given that we are within the pale of initialization, and memory locations are of origins and values unknown, caution is indeed the better part of valor. So why not apply that principle to thread infos also? Right choice.
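The enable, schedule(), disable dance can be mimicked with a counter. This is a user-space toy under stated assumptions: `toy_cpu` and the `toy_*` functions are hypothetical names, and having `toy_schedule()` politely refuse when preemption is off is a simplification of this sketch (the real kernel is a good deal less polite about it).

```c
/* Toy per-CPU state, NOT kernel code: every name here is hypothetical. */
struct toy_cpu {
    int preempt_count;   /* > 0 means preemption is disabled */
    int schedule_calls;  /* how many times scheduling actually happened */
};

void toy_preempt_disable(struct toy_cpu *c) { c->preempt_count++; }
void toy_preempt_enable(struct toy_cpu *c)  { c->preempt_count--; }

/* Assumption of this sketch: refuse (-1) while preemption is disabled. */
int toy_schedule(struct toy_cpu *c)
{
    if (c->preempt_count != 0)
        return -1;
    c->schedule_calls++;
    return 0;
}

/*
 * Tail end of boot in miniature: start with preemption disabled, open the
 * window just long enough to schedule once, then close it again before
 * heading for the idle loop. Returns the final preempt_count, or -1 if
 * the sequence misbehaved.
 */
int toy_boot_tail(struct toy_cpu *c)
{
    int early, ok;

    c->preempt_count = 1;      /* not ready to schedule yet */
    c->schedule_calls = 0;

    early = toy_schedule(c);   /* too soon: refused */
    toy_preempt_enable(c);
    ok = toy_schedule(c);      /* now allowed */
    toy_preempt_disable(c);    /* back to caution before idling */

    return (early == -1 && ok == 0) ? c->preempt_count : -1;
}
```

After `toy_boot_tail()` the counter is back at 1 and exactly one scheduling pass has been recorded: the early attempt was turned away, the one inside the window went through.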

Such indeed are the joys of Linux Kernel Programming. Grins ?

I explain these specific Linux Kernel concepts and more in detail in my classes (Advanced Linux Kernel Programming @ UCSC-Extension), and also in other classes that I teach independently. Please take note, and take advantage also, of upcoming training sessions. As always, Feedback, Questions and Comments are appreciated and will be responded to.

Thanks

-Anand
