My dear Brethren and Fellow travellers in the land of x86 and the Linux Kernel, it is time to come clean on all the modes that extend x86 page sizes and physical memory addressing.

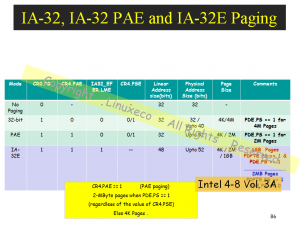

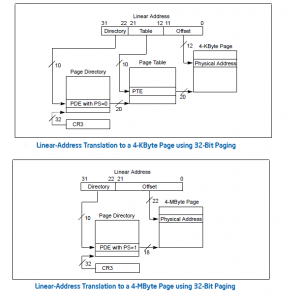

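So here we go, Ka Boom Ka Beem: In 32-bit non-PAE mode, we have PSE and PSE36 (AKA PSE40). PSE gives us 4K/4M paging with 32-bit PGDs and PTEs. PSE36/40 is a cheap way to bolt extended physical addressing (36 bits for 64GB, 40 bits for 1TB) onto the 4M pages that we created in PSE (Really!), still with 32-bit PGDs and PTEs. Only the 4M pages get the extra address bits; 4K pages stay within 32-bit physical addresses.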
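To make the PSE36/40 trick concrete, here is a minimal C sketch of how a 4M page-directory entry could be decoded into a physical address. It assumes the Intel layout as I understand it: the PS flag in bit 7, physical address bits 31:22 in entry bits 31:22, and the address bits above bit 31 stored from entry bit 13 upwards. The function name `pse_4m_phys_addr` and the `phys_bits` parameter are invented for illustration and are not kernel code.

```c
#include <assert.h>
#include <stdint.h>

#define PDE_PS (1u << 7)   /* page-size flag: this entry maps a 4M page */

/*
 * Hypothetical helper: combine a 4M page-directory entry with a 32-bit
 * linear address. phys_bits is an assumption of this sketch:
 * 32 for plain PSE, 36 for PSE36, 40 for PSE40.
 */
static uint64_t pse_4m_phys_addr(uint32_t pde, uint32_t linear, unsigned phys_bits)
{
    assert(pde & PDE_PS);
    assert(phys_bits >= 32 && phys_bits <= 40);

    unsigned high_width = phys_bits - 32;                   /* 0, 4 or 8 extra bits */
    uint64_t high = (pde >> 13) & ((1u << high_width) - 1u); /* address bits above 31 */
    uint64_t base = (high << 32) | (pde & 0xFFC00000u);      /* 4M-aligned frame base */

    return base | (linear & 0x003FFFFFu);                    /* add the 22-bit offset */
}
```

For example, an entry of `0x00402080` (PS set, one extra high address bit) with linear address `0x00012345` lands just above the 4GB line, which a 32-bit entry could not otherwise reach.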

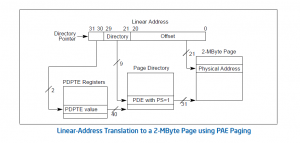

In 32-bit PAE mode, linear addresses are still 32-bit, but the PDPTEs, PDEs and PTEs extend to 64-bit, thereby changing page sizes to 4K/2M (2M when a PDE maps the page directly and no PTE is used), and extending physical memory addressing to as much as 52 bits (4PB).
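As a rough illustration of the PAE layout, the sketch below splits a 32-bit linear address into its table indices (2 bits for the PDPT, 9 bits each for the page directory and page table) and masks the frame address out of a 64-bit PTE. The struct and function names are hypothetical, and `phys_bits` stands in for whatever physical address width a given CPU reports.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical view of a 32-bit linear address under PAE paging. */
struct pae_indices {
    unsigned pdpt;        /* bits 31:30, selects 1 of 4 PDPTEs       */
    unsigned pd;          /* bits 29:21, selects 1 of 512 PDEs       */
    unsigned pt;          /* bits 20:12, selects 1 of 512 PTEs       */
    uint32_t offset_4k;   /* bits 11:0, offset inside a 4K page      */
    uint32_t offset_2m;   /* bits 20:0, offset inside a 2M page      */
};

static struct pae_indices pae_split(uint32_t linear)
{
    struct pae_indices ix;
    ix.pdpt      = (linear >> 30) & 0x3u;
    ix.pd        = (linear >> 21) & 0x1FFu;
    ix.pt        = (linear >> 12) & 0x1FFu;
    ix.offset_4k = linear & 0xFFFu;
    ix.offset_2m = linear & 0x1FFFFFu;
    return ix;
}

/* Strip the flag bits (low 12) and everything at or above phys_bits
 * from a 64-bit PTE, leaving the 4K frame address. */
static uint64_t pae_pte_frame(uint64_t pte, unsigned phys_bits)
{
    assert(phys_bits >= 32 && phys_bits <= 52);
    uint64_t mask = ((1ULL << phys_bits) - 1) & ~0xFFFULL;
    return pte & mask;
}
```

The 2M case simply stops one level early: the PDE supplies the frame and the low 21 bits of the linear address become the offset.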

And lastly, but not leastly, we have IA-32e mode (true blue 64-bit architecture), with 48-bit linear addressing, 4K/2M/1G page sizes (1G when a PDPTE maps the page directly and neither PDEs nor PTEs are used), up to 4PB (52 bits) of physical memory addressing, and 64-bit PML4Es, PDPTEs, PDEs and PTEs.
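The 48-bit linear address in IA-32e mode can be pictured as four 9-bit indices plus a 12-bit page offset. The following is a small sketch under that assumption (4-level paging only); the names `ia32e_indices`, `ia32e_split` and `ia32e_is_canonical` are made up for this post and are not kernel APIs.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical view of a 48-bit linear address under 4-level paging. */
struct ia32e_indices {
    unsigned pml4;      /* bits 47:39 */
    unsigned pdpt;      /* bits 38:30 */
    unsigned pd;        /* bits 29:21 */
    unsigned pt;        /* bits 20:12 */
    unsigned offset;    /* bits 11:0  */
};

/* A 48-bit address is canonical when bits 63:47 are all equal. */
static int ia32e_is_canonical(uint64_t va)
{
    uint64_t upper = va >> 47;               /* the top 17 bits */
    return upper == 0 || upper == 0x1FFFFu;
}

static struct ia32e_indices ia32e_split(uint64_t va)
{
    assert(ia32e_is_canonical(va));

    struct ia32e_indices ix;
    ix.pml4   = (unsigned)((va >> 39) & 0x1FFu);
    ix.pdpt   = (unsigned)((va >> 30) & 0x1FFu);
    ix.pd     = (unsigned)((va >> 21) & 0x1FFu);
    ix.pt     = (unsigned)((va >> 12) & 0x1FFu);
    ix.offset = (unsigned)(va & 0xFFFu);
    return ix;
}
```

With 2M pages the walk stops at the PDE and the low 21 bits are the offset; with 1G pages it stops at the PDPTE and the low 30 bits are the offset.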

John, did I get it all, and right also, this time? Did you get the cigar too? Haaah?

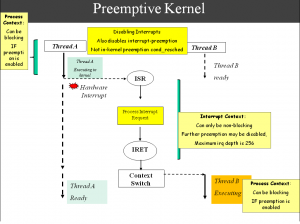

We all … must admit to an acute case of x86-itis, so from the next post onwards, it is time to switch gears. We will start looking at the various implications for the Kernel of some of these x86-isms on which we have waxed eloquent in this and prior posts.

I will also note that we do a good job of explaining these concepts in some of our training sessions, going by course evaluations and by feedback from clients (some of which are ISPs).

I do hope everyone enjoyed this post. Thanks again.
