Kernel preemption tries to ensure fair usage of limited CPU resources. One way to understand kernel preemption is to explore its opposite, i.e. the way it WAS before the 2.6 Linux kernel (which is preemptive). Well, the way it WAS .. was a cooperative world: a process that got the CPU and was executing in the kernel was expected to play nice and cooperatively hand over the CPU (e.g. at an EXIT system call), or the kernel could also, when it switched back to user mode, decide to schedule a new process. Processes were expected to be graceful in letting others use CPU resources.

Processes had to deal with many issues to try to ensure this model “worked”. However, in addition to the hard problems this created, there are some architectural features that just plumb stall out CPU resources and ensure suboptimal CPU usage regardless of whatever processes could have done about it. In other words, processes do not have visibility into the underlying mechanisms of the operating system / kernel itself.
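To put some numbers on the cooperative model, here is a small user-space sketch in C. It is a toy, not kernel code: `toy_finish_time`, `TOY_MAX_TASKS` and the idea of measuring work in "ticks" are all hypothetical names and assumptions of mine. It computes when one watched task finishes, first when every task runs until it is done (cooperative), then when tasks are cut off after a fixed time slice (round robin).

```c
#include <assert.h>

#define TOY_MAX_TASKS 8   /* hypothetical limit, just to keep the sketch simple */

/*
 * Toy model: task i needs work[i] ticks of CPU.
 * slice <= 0 : each task runs until it is done (cooperative).
 * slice >  0 : each task runs at most `slice` ticks per turn (round robin).
 * Returns the time at which task `watch` finishes, or -1 if it had no work.
 * *dispatches receives how many times a task was put on the CPU.
 */
int toy_finish_time(const int *work, int n, int slice, int watch, int *dispatches)
{
    int left[TOY_MAX_TASKS];
    int now = 0, finish = -1, remaining = 0;

    assert(n > 0 && n <= TOY_MAX_TASKS && watch >= 0 && watch < n);

    for (int i = 0; i < n; i++) {
        left[i] = work[i];
        if (left[i] > 0)
            remaining++;
    }
    *dispatches = 0;

    while (remaining > 0) {
        for (int i = 0; i < n; i++) {
            if (left[i] == 0)
                continue;               /* finished tasks are skipped */

            /* Run for a full slice, or to completion if no slice applies. */
            int run = (slice > 0 && slice < left[i]) ? slice : left[i];
            now += run;
            left[i] -= run;
            (*dispatches)++;

            if (left[i] == 0) {
                remaining--;
                if (i == watch)
                    finish = now;
            }
        }
    }
    return finish;
}
```

With two tasks needing 100 and 2 ticks, the short task finishes at tick 102 in the cooperative case (2 dispatches), and at tick 12 with a slice of 10 ticks (11 dispatches). Better latency for the short task, paid for with more scheduling work.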

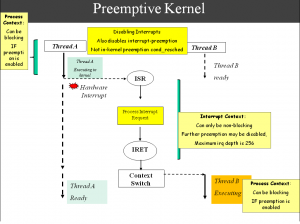

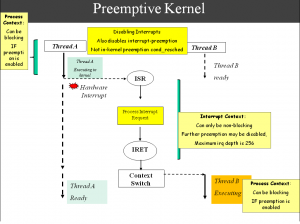

Linux kernel preemption ensures what I consider to be a somewhat fair (aka somewhat arbitrary) reallocation of CPU resources among processes, decided by the kernel, which has “all the knowledge under the sun” about the underlying “goings on”. As an example, if the kernel KNOWS the system is taking timer interrupts at HZ rate (see blog below), then why not try to prioritize between existing processes, and give others a chance to run? If the kernel KNOWS a process is about to stall on a resource that may take some time to become available… why not put the process to “sleep” and “wake” some fortunate process up?
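The timer-interrupt idea can be sketched in a few lines of user-space C. This is a toy model under my own assumptions, not how the Linux scheduler is written: `toy_task`, `toy_timer_tick` and `toy_run_until_resched` are hypothetical names. Each simulated tick eats into the running task's time slice, and when the slice is used up a flag is raised to say "pick someone else".

```c
#include <stdbool.h>

/* Toy model only; every name here is hypothetical. */
struct toy_task {
    int  slice_left;    /* timer ticks left in the current time slice */
    bool need_resched;  /* set once another task should get the CPU */
};

/* Called once per simulated timer interrupt. */
void toy_timer_tick(struct toy_task *cur)
{
    if (cur->slice_left > 0)
        cur->slice_left--;
    if (cur->slice_left == 0)
        cur->need_resched = true;
}

/*
 * Deliver timer ticks to the running task until it is flagged for
 * rescheduling or max_ticks have elapsed. Returns the ticks delivered.
 */
int toy_run_until_resched(struct toy_task *cur, int max_ticks)
{
    int ticks = 0;

    while (ticks < max_ticks && !cur->need_resched) {
        toy_timer_tick(cur);
        ticks++;
    }
    return ticks;
}
```

A task that starts with `slice_left = 3` gets exactly three ticks before `need_resched` is set. The task itself never has to volunteer anything, which is the whole point.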

Well.. there are many reasons NOT to do that also (if the processes are real-time processes, for example), or to prevent “lockouts”, etc.

In the end, it boils down to a tradeoff between latency for the lucky few vs. throughput for the very many, and all shades in between, with considerations galore, a few of which are listed below:

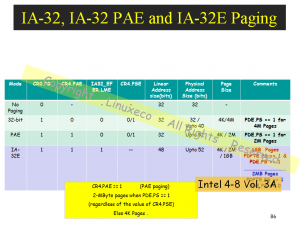

Explicit and Implicit “Blocking”, Critical Code Section Synchronizations, Network and Block Device Processing Latencies and Throughputs, Interrupt Latencies, “Deferred Processing”, Safety in Preemptability (preventing lockouts because we have preempted tasks that should not have been), the denials of preemption / recursive depths of denials, relationships to interrupts and recursive relationships to the above, system-programming architectural considerations and requirements (Scheduler Priorities, Classes etc.), SMP / Cross Processor considerations, memory management, x86 Architectural considerations in Interrupt Latencies, etc.
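The “recursive depths of denials” item is easiest to see with a counter. The kernel has `preempt_disable()` and `preempt_enable()` for this. What follows is NOT that code, only a simplified user-space model with hypothetical names (`toy_cpu`, `toy_preempt_disable`, and so on) that I am assuming for illustration. Each denial bumps a depth counter, and a requested reschedule is held back until the depth is back at zero.

```c
#include <assert.h>
#include <stdbool.h>

/* Toy model only; names are hypothetical and merely echo the kernel's idea. */
struct toy_cpu {
    int  preempt_depth;  /* nested "do not preempt me" requests in effect */
    bool need_resched;   /* a reschedule has been requested and is pending */
    int  switches;       /* simulated context switches performed */
};

/* Switch tasks only if one is wanted and nobody is denying preemption. */
void toy_try_preempt(struct toy_cpu *cpu)
{
    if (cpu->preempt_depth == 0 && cpu->need_resched) {
        cpu->need_resched = false;
        cpu->switches++;
    }
}

void toy_preempt_disable(struct toy_cpu *cpu)
{
    cpu->preempt_depth++;
}

void toy_preempt_enable(struct toy_cpu *cpu)
{
    assert(cpu->preempt_depth > 0);   /* an unbalanced enable is a bug */
    cpu->preempt_depth--;
    toy_try_preempt(cpu);             /* a held-back request may fire now */
}

/* For example, from a simulated interrupt that woke a more important task. */
void toy_request_resched(struct toy_cpu *cpu)
{
    cpu->need_resched = true;
    toy_try_preempt(cpu);
}
```

If preemption is disabled twice and a reschedule is then requested, the first enable does nothing visible. Only the second, outermost enable lets the switch happen.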

Again, all this is probably a good review for our past students. We explain these specific x86 features, Linux kernel concepts and more in detail in my classes (Advanced Linux Kernel Programming @UCSC-Extension), and also in other classes that I teach independently. Please take note, and take advantage also, of upcoming training sessions. As always, feedback, questions and comments are appreciated and will be responded to.
