At the outset, please accept my apologies for the x86 protection mechanisms.

I did not create them. They ARE difficult to understand (huge understatement). They ARE elegant also (Oucchh). I am merely a messenger making an attempt at explaining them. The complaint department for the x86 architecture is with the FBI... just kidding.

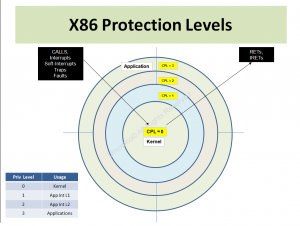

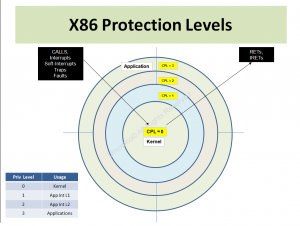

x86 protections revolve around the Current Privilege Level (CPL). The CPL is a two-bit field, so it can take on four privilege levels: 0, 1, 2 and 3.

NOTE here, please, the types of instructions that enable moves between different privilege levels.

A future post will describe just how and when the CPL changes, but for now let us take the following for granted: when x86 processors wake up, they are in REAL MODE (Give Me Your Real Mode), and the CPL is set to numerical “0”. This is the highest privilege an x86 processor can operate at, and the Linux Kernel operates at this level. All applications on x86 processors operate at Level 3.

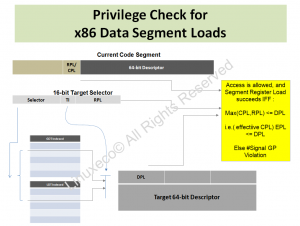

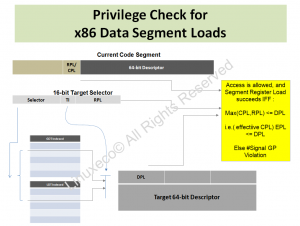

This article focuses on a MOV into a Data Segment register (accomplished with a “MOV DS,AX” for example), and the protection mechanisms associated with that.

Note that the Code Segment register CS is “just” another segment register. However, CS cannot be loaded with a MOV: it is loaded by a “transfer control” instruction (CALL FAR, JMP, Software-INT, RET, IRET etc.) or by an Asynchronous Event (Hardware Interrupt).

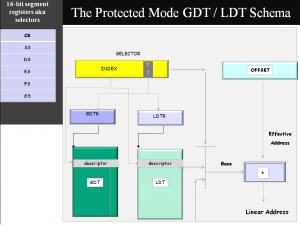

In the MOV DS, AX, the AX register contains a 16-bit SELECTOR. Bits 1:0 of the SELECTOR hold a two-bit Requestor Privilege Level (RPL) value. As explained in an earlier post, the SELECTOR also has a Table Indicator bit (“TI”) that is used to select either the GDT (Global Descriptor Table) or the LDT (Local Descriptor Table). The GDT and LDT are arrays of 8-byte DESCRIPTORs, and one of these DESCRIPTORs is chosen by the SELECTOR to be loaded into the Segment Register DS.
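To make the SELECTOR layout concrete, here is a minimal Python sketch that splits a 16-bit selector into its three fields and computes the byte offset of the chosen 8-byte DESCRIPTOR within its table. The names `Selector`, `decode_selector` and `descriptor_offset` are made up for this illustration, and the value `0x002B` is just a sample selector, not something taken from the figures.

```python
from typing import NamedTuple


class Selector(NamedTuple):
    index: int  # SELECTOR[15:3]: which descriptor within the table
    ti: int     # SELECTOR[2]:    Table Indicator, 0 = GDT, 1 = LDT
    rpl: int    # SELECTOR[1:0]:  Requestor Privilege Level


def decode_selector(raw: int) -> Selector:
    """Split a 16-bit segment selector into index, TI and RPL."""
    raw &= 0xFFFF
    return Selector(index=raw >> 3, ti=(raw >> 2) & 1, rpl=raw & 3)


def descriptor_offset(sel: Selector) -> int:
    """Byte offset of the descriptor inside the GDT or LDT (8 bytes each)."""
    return sel.index * 8


sel = decode_selector(0x002B)  # sample value, binary 0000 0000 0010 1011
table = "LDT" if sel.ti else "GDT"
print(f"index={sel.index} table={table} rpl={sel.rpl} "
      f"offset={descriptor_offset(sel)}")
```

For the sample value, the low two bits give RPL 3, the TI bit is clear (GDT), and the index is 5, so the descriptor sits 40 bytes into the GDT.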

That Chosen DESCRIPTOR has a two-bit (surprise surprise) DPL (Descriptor Privilege Level) field.
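As a rough illustration of where that DPL field lives, the sketch below pulls it out of a raw 8-byte descriptor. It relies on the standard protected-mode descriptor layout, in which byte 5 is the access byte and the DPL occupies bits 6:5 of that byte. The function name `descriptor_dpl` and the two sample descriptors (flat data segments that differ only in DPL) are assumptions made for this example.

```python
def descriptor_dpl(descriptor: bytes) -> int:
    """Return the two-bit DPL of an 8-byte segment descriptor."""
    if len(descriptor) != 8:
        raise ValueError("a segment descriptor is exactly 8 bytes")
    access_byte = descriptor[5]      # P | DPL (2 bits) | S | type (4 bits)
    return (access_byte >> 5) & 0b11


# Sample descriptors in memory (little-endian) order; access byte is byte 5.
kernel_data = bytes.fromhex("ffff00000092cf00")  # access byte 0x92
user_data = bytes.fromhex("ffff000000f2cf00")    # access byte 0xF2

print("kernel data DPL:", descriptor_dpl(kernel_data))
print("user data DPL:  ", descriptor_dpl(user_data))
```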

SO … the major “players” in Protection Mechanisms are the CPL, DPL and the RPL.

Here, it is important to note the special role that the Segment SELECTOR plays.

In addition to identifying the GDT or the LDT that will be used (as indicated by the “TI” bit of the SELECTOR), and the descriptor itself within the GDT or LDT (as identified by SELECTOR[15:3]), the RPL field of the selector plays the role of “WEAKENING” the CPL (Current Privilege Level).

The thinking is that the RPL represents a privilege level of sorts, and the numerically larger (that is, the less privileged) of the CPL and the SELECTOR’s RPL is the CPL.effective (Effective CPL) for the purposes of the Segment register Load.

The “MOV DS, AX” (into Segment Register) sets up the memory space that will become accessible to the program doing the MOV DS. The protection check performed (CPL.effective <= Descriptor.DPL) will either allow the MOV DS to complete or reject it.

IF the Protection checks fail for the MOV DS, the processor hardware will generate a #GP (General Protection) Fault.
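The privilege check for a data segment load can be sketched in a few lines of Python. This models only the CPL/RPL/DPL comparison described above; real hardware performs other checks as well (descriptor type, present bit, table limits) that are left out here. The exception class and function names are hypothetical and exist only for this illustration.

```python
class GeneralProtectionFault(Exception):
    """Stand-in for the #GP fault raised by the processor."""


def effective_cpl(cpl: int, rpl: int) -> int:
    """The numerically larger (less privileged) of CPL and RPL."""
    return max(cpl, rpl)


def check_data_segment_load(cpl: int, selector: int, dpl: int) -> None:
    """Model the privilege check of MOV DS, AX; raise on failure."""
    rpl = selector & 0b11                # SELECTOR[1:0]
    if effective_cpl(cpl, rpl) > dpl:    # the check is CPL.effective <= DPL
        raise GeneralProtectionFault(
            f"#GP: CPL={cpl} RPL={rpl} DPL={dpl} selector={selector:#06x}"
        )


# CPL 3 code loading a DPL 3 segment with an RPL 3 selector passes.
check_data_segment_load(cpl=3, selector=0x002B, dpl=3)

# CPL 3 code trying to load a DPL 0 segment faults.
try:
    check_data_segment_load(cpl=3, selector=0x0018, dpl=0)
except GeneralProtectionFault as fault:
    print(fault)
```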

This methodology for Segment register Loads is used, with some modifications, for the gamut of Segment register Loads within X86, wherever they may happen.

“Far” Jumps etc. load the Code Segment (CS), including when there is a change in Privilege Levels, and a MOV SS loads the stack segment. On a MOV SS, a failed privilege check also raises #GP, while the Stack Fault (#SS) is raised when the selected stack segment is marked not present.

From the above, it becomes clear that the Kernel has full and complete access to the User Mode memory. This is a capability that is necessary, for example, when in response to a READ system call, the Kernel reads data from Disks (in Privilege Level 0), then copies the Data into User Space (operating in Privilege Level 3). The reverse is not true: User Mode code cannot reach Kernel memory.
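The asymmetry between Kernel and User Mode falls straight out of the data segment check (CPL.effective <= DPL). The short sketch below runs a handful of CPL/RPL/DPL combinations through that rule. The helper name `can_load_data_segment` and the case labels are invented for this example, and only the privilege comparison is modeled.

```python
def can_load_data_segment(cpl: int, rpl: int, dpl: int) -> bool:
    """True when max(CPL, RPL) <= DPL, i.e. the load would not #GP."""
    return max(cpl, rpl) <= dpl


KERNEL, USER = 0, 3

cases = [
    # (label, CPL, RPL, DPL)
    ("kernel loads user segment", KERNEL, KERNEL, USER),
    ("kernel loads kernel segment", KERNEL, KERNEL, KERNEL),
    ("user loads user segment", USER, USER, USER),
    ("user loads kernel segment", USER, USER, KERNEL),
    ("user forges RPL 0 for kernel segment", USER, KERNEL, KERNEL),
    ("kernel uses RPL 3 selector for kernel segment", KERNEL, USER, KERNEL),
]

for label, cpl, rpl, dpl in cases:
    verdict = "allowed" if can_load_data_segment(cpl, rpl, dpl) else "#GP"
    print(f"{label:<46} CPL={cpl} RPL={rpl} DPL={dpl} -> {verdict}")
```

The last two cases show the “WEAKENING” role of the RPL: writing a lower RPL into a selector gains User Mode code nothing, while a selector carrying RPL 3 makes even CPL 0 code fail the check against a DPL 0 segment.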

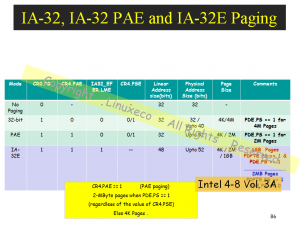

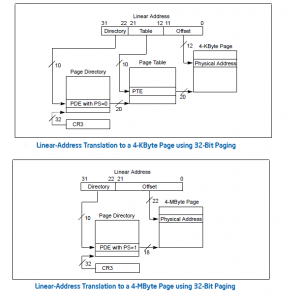

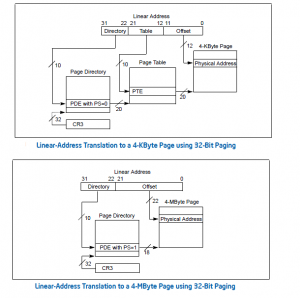

Understanding the Linux x86 Server memory model is not possible without understanding x86 Segmentation, the Protection Mechanisms, and the Paging/TLB Mechanisms (to be discussed later).
