It is normal to reason, as we explain in our courses, that the Linux Kernel may organize at least a “few” pages as 4M pages. It can always be argued that we need not have enabled 4M paging, but that is not the point here. We need to figure out how to have our cake and eat it too. Hopefully.

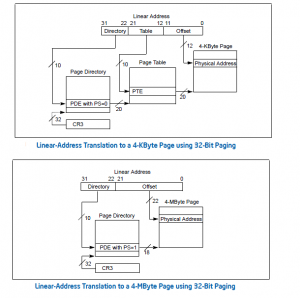

So… you guessed it: when push comes to shove, and for reasons that are as good as any I can come up with to the contrary, the buddy allocator becomes the busting allocator, busting up these 4M pages into the teeny weeny 4K pages that we really needed to allocate in the first place. And then… needless to say, performance hell breaks loose, with TLB flushes following the reset of ANY PDT entry’s “PS” bit. (Just in case you missed this on 60 Minutes: Intel requires TLB flushes because the behavior for translations left in the TLBs, once a PS bit in the PDT gets the “Reset” treatment on page splits and the like, is IMPLEMENTATION SPECIFIC.) I call that a bug-turned-nonfeature. Let’s move on, shall we?

You may argue “once busted, forever split”, but we do know that the Buddy allocator will not let bygones be bygones, right? It goes on to set the PDT “PS” bit again on Buddy order-coalescing etc.

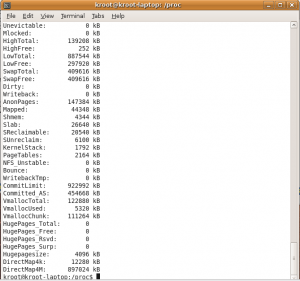

The questions apply to PAE mode, and to 64-bit modes also. I went looking to see just what percentage of free pages were 4M pages in my VM (compiled without the PAE option, 4M pages enabled). Here it is ->
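If you want to take a similar reading on your own box, here is a minimal sketch, not the tool used for this post. It assumes 4K base pages and treats order-10 buddy blocks (2^10 × 4K = 4M) as the 4M candidates. It reads the per-order free block counts that the kernel exposes in `/proc/buddyinfo`. The helper names `parse_buddyinfo`, `huge_block_share` and `report` are made up for illustration. Keep in mind that a free order-10 block only tells you the memory is contiguous enough for a 4M page, not that it is currently mapped as one.

```python
HUGE_ORDER = 10  # assumption: 2**10 pages * 4K = 4M (non-PAE 32-bit x86)


def parse_buddyinfo(text):
    """Return {(node, zone): [free block count per order]} from buddyinfo text."""
    zones = {}
    for line in text.splitlines():
        parts = line.split()
        # Expected shape: Node 0, zone Normal c0 c1 ... cN
        if len(parts) < 5 or parts[0] != "Node" or parts[2] != "zone":
            continue
        node = parts[1].rstrip(",")
        zones[(node, parts[3])] = [int(c) for c in parts[4:]]
    return zones


def huge_block_share(counts, huge_order=HUGE_ORDER):
    """Fraction of free base pages that sit in blocks of order >= huge_order."""
    total = sum(n << order for order, n in enumerate(counts))
    huge = sum(n << order for order, n in enumerate(counts) if order >= huge_order)
    return huge / total if total else 0.0


def report(path="/proc/buddyinfo"):
    """Print the share per zone; call this on a Linux machine."""
    with open(path) as f:
        for (node, zone), counts in parse_buddyinfo(f.read()).items():
            share = huge_block_share(counts)
            print(f"node {node} zone {zone}: {share:.1%} of free pages in order>={HUGE_ORDER} blocks")
```

Calling `report()` a few times under load gives a feel for how quickly the big blocks drain away.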

We all know the benefits of enabling 4M Pages, yak yak, but how about the performance lost to TLB flushes when just one, or just about one, of these Huge Pages is split up? The data we just presented seems to suggest that if we enable 4M Pages, the performance loss would be as probabilistic as rain in the rain forests, especially for “Higher Performance” systems at the cusp of 4K and 2M/4M page sizes. I mean, being on one side of the camp (4K only) or the other (2M/4M) is so much more preferable. But reality rarely obliges our whims and wishes, and the trend to disappoint in that regard will continue, we surmise.

While the issues apply to PAE and 64-bit addressing modes as well, a pic of the 32-bit 4K to 4M paging transition, based on the PDT “PS” bit, is included here ->
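Picture aside, the 32-bit case comes down to one bit. As a small worked sketch: in 32-bit paging with PSE enabled, bit 7 of a page directory entry is the PS bit. With PS set, the low 22 bits of the linear address are the offset into a 4M page. With PS clear, bits 21:12 index a page table and only the low 12 bits are the offset. The function name `split_linear` and the PDE values in the usage lines are hypothetical, chosen only to show the two cases.

```python
PDE_PRESENT = 1 << 0  # P bit
PDE_PS = 1 << 7       # page-size bit of a 32-bit page directory entry


def split_linear(addr, pde):
    """Split a 32-bit linear address the way the walk would go for this PDE."""
    if not pde & PDE_PRESENT:
        raise ValueError("PDE not present")
    pd_index = (addr >> 22) & 0x3FF  # bits 31:22 pick the PDE in both cases
    if pde & PDE_PS:
        # 4M page: the PDE maps the frame directly, low 22 bits are the offset
        return {"size": "4M", "pd_index": pd_index, "offset": addr & 0x3FFFFF}
    # 4K page: the PDE points at a page table indexed by bits 21:12
    return {
        "size": "4K",
        "pd_index": pd_index,
        "pt_index": (addr >> 12) & 0x3FF,
        "offset": addr & 0xFFF,
    }


# Hypothetical PDE values: same address, before and after a split
before_split = split_linear(0xC0401234, PDE_PRESENT | PDE_PS)
after_split = split_linear(0xC0401234, PDE_PRESENT)
```

The same linear address resolves through one level before the split and through two levels after it, which is why a stale 4M translation left in a TLB cannot simply be trusted once PS is cleared.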

After all this, is there any way of measuring dynamic, load-based performance loss due to TLB flushes against the prevailing contexts of page allocations? We do know it would be “traffic-dependent”, especially for caching and web servers, where performance losses would, in all probability, be most noticeable and critically offensive also. Is there any way for lesser machines to take advantage of 2M/4M page sizes without burdening a perhaps predominantly 4K allocation? And vice versa also? Or do we just have to have customized and predetermined “preallocations” of Huge/4K Pages based on application-level heuristics? And if so, how is that to be accomplished, if at all?
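As one rough starting point for the measurement question, and nothing more than that, here is a sketch that samples `/proc/vmstat` twice and reports how much the TLB flush counters moved in between. It assumes a kernel built with `CONFIG_DEBUG_TLBFLUSH`, since otherwise the `nr_tlb_*` counters are not exported at all. The helper names are made up for illustration. Note that these counters cover every TLB flush on the system, not only the ones caused by huge page splits, so the numbers have to be read against whatever else the box is doing.

```python
# Assumption: these counters only appear on kernels built with CONFIG_DEBUG_TLBFLUSH
TLB_COUNTERS = (
    "nr_tlb_local_flush_all",
    "nr_tlb_local_flush_one",
    "nr_tlb_remote_flush",
    "nr_tlb_remote_flush_received",
)


def parse_vmstat(text):
    """Turn 'name value' lines into a {name: int} dict, skipping anything else."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].isdigit():
            stats[parts[0]] = int(parts[1])
    return stats


def counter_deltas(before, after, names=TLB_COUNTERS):
    """Difference per counter, only for counters present in both samples."""
    return {n: after[n] - before[n] for n in names if n in before and n in after}


def sample(path="/proc/vmstat"):
    """Read and parse one snapshot; call this on a Linux machine."""
    with open(path) as f:
        return parse_vmstat(f.read())
```

Take one `sample()` before a traffic burst and one after, feed both to `counter_deltas`, and compare the result across a 4K-only boot and a 4M-enabled boot of the same workload.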

We discuss Linux Kernel concepts and more in my classes, with code walkthroughs and programming assignments (Advanced Linux Kernel Programming @UCSC-Extension, and also in other classes that I teach independently). Please take note of upcoming training sessions. As always, feedback, questions and comments are appreciated and will be responded to.
